
#include <net/sock.h>

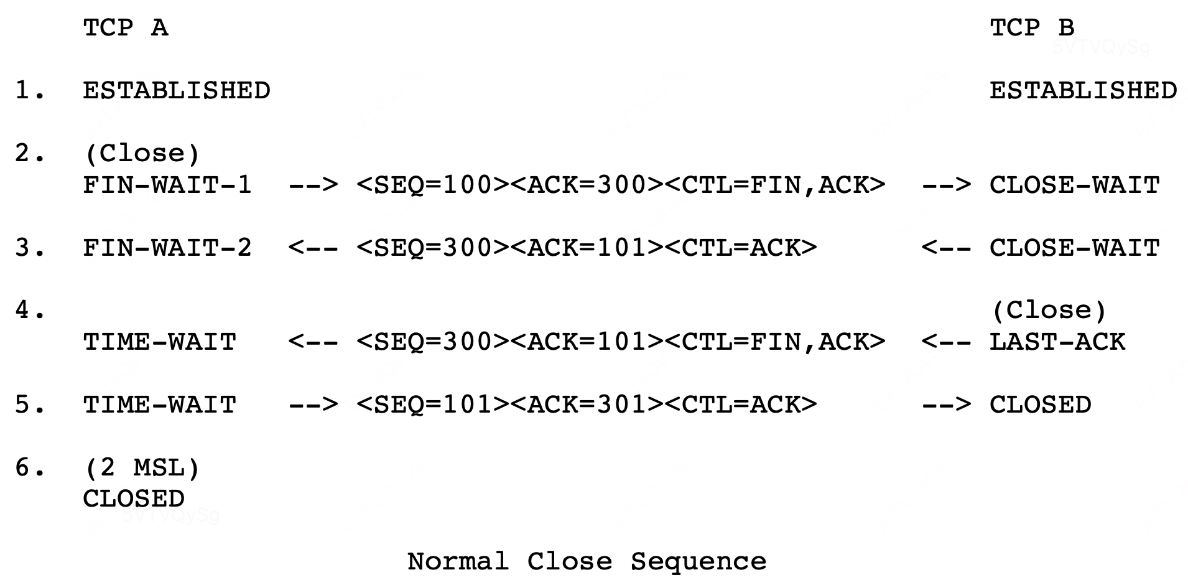

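/*
TCP socket states (values of sk->__sk_common.skc_state, see include/net/tcp_states.h):

TCP_ESTABLISHED = 1,
TCP_SYN_SENT = 2,
TCP_SYN_RECV = 3,
TCP_FIN_WAIT1 = 4,
TCP_FIN_WAIT2 = 5,
TCP_TIME_WAIT = 6,
TCP_CLOSE = 7,
TCP_CLOSE_WAIT = 8,
TCP_LAST_ACK = 9,
TCP_LISTEN = 10,
TCP_CLOSING = 11,
TCP_NEW_SYN_RECV = 12,
TCP_MAX_STATES = 13

Each hook point reports the process pid and the socket's sk_state.
Receive-path hooks (tcp_v4_do_rcv, tcp_rcv_established, tcp_rcv_state_process,
tcp_fin, tcp_timewait_state_process) often run in softirq context, where pid and
comm belong to whichever task happened to be running, so the comm filter may
miss or misattribute those events.
*/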

kprobe:tcp_close
/ comm == "python" /
{
    $sk = (struct sock*)arg0;
    printf("[tcp_close] pid: %d, state: %d, sock: %d, sk_max_ack_backlog: %d\n",
        pid, $sk->__sk_common.skc_state,
        $sk, $sk->sk_max_ack_backlog);
}

kprobe:tcp_set_state
/ comm == "python" /
{
    $sk = (struct sock*)arg0;
    // arg1 is the state being set; skc_state still holds the old state at entry
    $ns = arg1;
    printf("[tcp_set_state] pid: %d, state: %d, ns: %d, sk: %d\n",
        pid, $sk->__sk_common.skc_state,
        $ns, $sk);
}

kprobe:tcp_rcv_established
/ comm == "python" /
{
    $sk = (struct sock*)arg0;
    printf("[tcp_rcv_established] pid: %d, state: %d, sk: %d\n",
        pid, $sk->__sk_common.skc_state,
        $sk);
}

kprobe:tcp_fin
/ comm == "python" /
{
    $sk = (struct sock*)arg0;
    printf("[tcp_fin] pid: %d, state: %d, sk: %d\n",
        pid, $sk->__sk_common.skc_state, $sk);
}

kprobe:tcp_send_fin
/ comm == "python" /
{
    $sk = (struct sock*)arg0;
    printf("[tcp_send_fin] pid: %d, state: %d, sk: %d\n",
        pid, $sk->__sk_common.skc_state, $sk);
}

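kprobe:tcp_timewait_state_process
/ comm == "python" /
{
    // arg0 is actually a struct inet_timewait_sock *; it begins with a
    // struct sock_common, just like struct sock, so skc_state is read correctly
    $sk = (struct sock*)arg0;
    printf("[tcp_timewait_state_process] pid: %d, state: %d, sk: %d\n",
        pid, $sk->__sk_common.skc_state,
        $sk);
}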

kprobe:tcp_rcv_state_process
/ comm == "python" /
{
    $sk = (struct sock*)arg0;
    printf("[tcp_rcv_state_process] pid: %d, state: %d, sk: %d\n",
        pid, $sk->__sk_common.skc_state,
        $sk);
}

kprobe:tcp_v4_do_rcv
/ comm == "python" /
{
    $sk = (struct sock*)arg0;
    printf("[tcp_v4_do_rcv] pid: %d, state: %d, sk: %d\n",
        pid, $sk->__sk_common.skc_state,
        $sk);
}

kprobe:tcp_stream_wait_close
/ comm == "python" /
{
    $sk = (struct sock*)arg0;
    printf("[tcp_stream_wait_close] pid: %d, state: %d, sk: %d\n",
        pid, $sk->__sk_common.skc_state,
        $sk);
}
